LLMs in mid-2025

Let me start by saying I have no idea what I'm talking about when it comes to LLMs. The whole landscape is changing so fast, and information/sentiment is so mixed that it feels almost impossible to have a confident opinion.

Level setting

A couple of things I believe (?) to be true right now:

- Most companies are pushing AI features and LLM use as a way to extract more value from fewer workers. This is bad. Full stop.

- Language models are useful tools that have no intelligence. There is still a ton of utility there, but decision-making and anything else that requires intelligence should not be left to an LLM.

- We should be thoughtful about AI energy consumption, but maybe it's not as bad as some folks make it out to be (this is one that I would like to understand better).

- The fact that so much of the training data is open source makes some of the ethical concerns about LLMs less tricky for software development. We legally gave away our code for free. This is different from artists who are having their work stolen.

Fly.io’s “My AI Skeptic Friends Are All Nuts”

That said, I recently re-posted an article titled My AI Skeptic Friends Are All Nuts. Afterward, a handful of friends who I respect and admire commented either publicly or privately that the article did not speak to them in the way that it spoke to me. I thought that was interesting, and I wanted to take a little more space than 300 characters to explore…

The major points outlined in the blog post are:

- Using an LLM for code in June 2025 is very different from using one 6 months ago (much, much, much better)

- LLMs are good at writing tedious code, letting you build things that you might otherwise never have actually gotten to

- Not all code generated by an LLM is going to be good. You need to code review it. This is true of all code.

- Hallucination is less of a problem in the world of "agentic" AI that can run linters, unit tests, static analysis, etc. If the LLM gets it wrong the first time, it can run one (or all) of those tools and try again until it gets it right.

- LLMs are better at writing code for which there is lots of training data, and even better at generating code where there is a well-established convention in place. That means sometimes they're not the right tool for the job.

- Just like not all furniture has to be handmade using Japanese joinery techniques, not all code has to be lovingly crafted by hand. (This is one I have a bit of an issue with. More on that later.)

- LLM code is often mediocre. Sometimes that's fine. Oftentimes that's a better starting point than a complete blank slate.

- It doesn't matter that LLMs are not intelligent and will probably never be AGI. They still have real utility right now.

- AI tools will definitely take jobs. That could be bad. Maybe new jobs will be created in their place, as has been the case in the past with technology advances, but maybe not.

- Training on open source is different from training on stolen art. Also, the tech industry has shown a real disregard for IP law, so maybe there's some stone-throwing from glass houses going on here. (Not sure I fully agree with this one, but it's an interesting point.)

- A real shift is happening, and we need to accept that. LLMs are already better at some things (like debugging using long log output).

- Boy it sucks to constantly hear about AI.

I agree with almost all those points. Maybe the way Thomas Ptacek conveyed those points was kinda off-putting, but honestly, I think getting yelled at a bit was exactly what I needed.

I actually think the title, “My AI Skeptic Friends Are All Nuts,” paired with the tone, was part of what made me like the article. I’m imagining my friend, Thomas (who in reality I don’t know at all), looking at me pounding on the “but they don’t have any real intelligence” drum, and just thinking, “why is Chris being such an idiot right now?”

A side note on craftsmanship

This is the one point that I mostly disagree with. In the article, Thomas says:

> Steve Jobs was wrong: we do not need to carve the unseen feet in the sculpture. Nobody cares if the logic board traces are pleasingly routed. If anything we build endures, it won’t be because the codebase was beautiful.

I think you can build software without much care for the craft. But I think caring about the craft makes it better. I think that a belief in craftsmanship is what makes Laravel better. I think caring about the logic board traces made Apple better. I think that we’ve built an enduring product at InterNACHI because we care about the craft.

And you can use an LLM and still care about the craft. In fact, I think you can spend even more time on the craft with the help of AI. Use it as a tool. Use it to get started, or explore, or get unstuck. Use it to build the part that's holding you back from making something great. It's a tool, and we're craftspeople. Use it well.

AI for me right now

Right now, I'm using a bunch of different language models day-to-day:

- For certain questions, especially when I know that there is a single, well-established answer, I find myself jumping to Raycast’s AI panel much more often than bothering to use web search. I like that Raycast gives me access to all the major models in one place. For code-related questions, I usually reach for Claude. For other things, often the OpenAI models are faster/better.

- For agents, I've tried JetBrains Junie, Cursor, and codename goose. Goose is neat in that it's open source and extensible. I like it in theory, but right now it's just not that great. I used Cursor for a few experiments, and liked the agent but hated the IDE. Now that JetBrains has released Junie, I've canceled my Cursor Pro subscription and am going all-in on Junie. In general, my feeling is that each agent is going to excel at different things, so you just have to pick the one that works for your workflow.

My heuristic

In my experience, LLMs excel at producing code that is unremarkable. But programming needs lots of unremarkable code: controllers, request objects, config files, TypeScript interfaces, GitHub Actions, Dockerfiles, etc., etc.

The more commonplace the code, the better.

For right now, when I have unique, human problems, I rarely reach for AI. And when I do, it usually disappoints. Figuring out complex interaction design for a niche feature that's unique to the small industry that I work in is not what this tool is good at. Maybe some day, tools under the umbrella of “artificial intelligence” will be good at that. Not today.

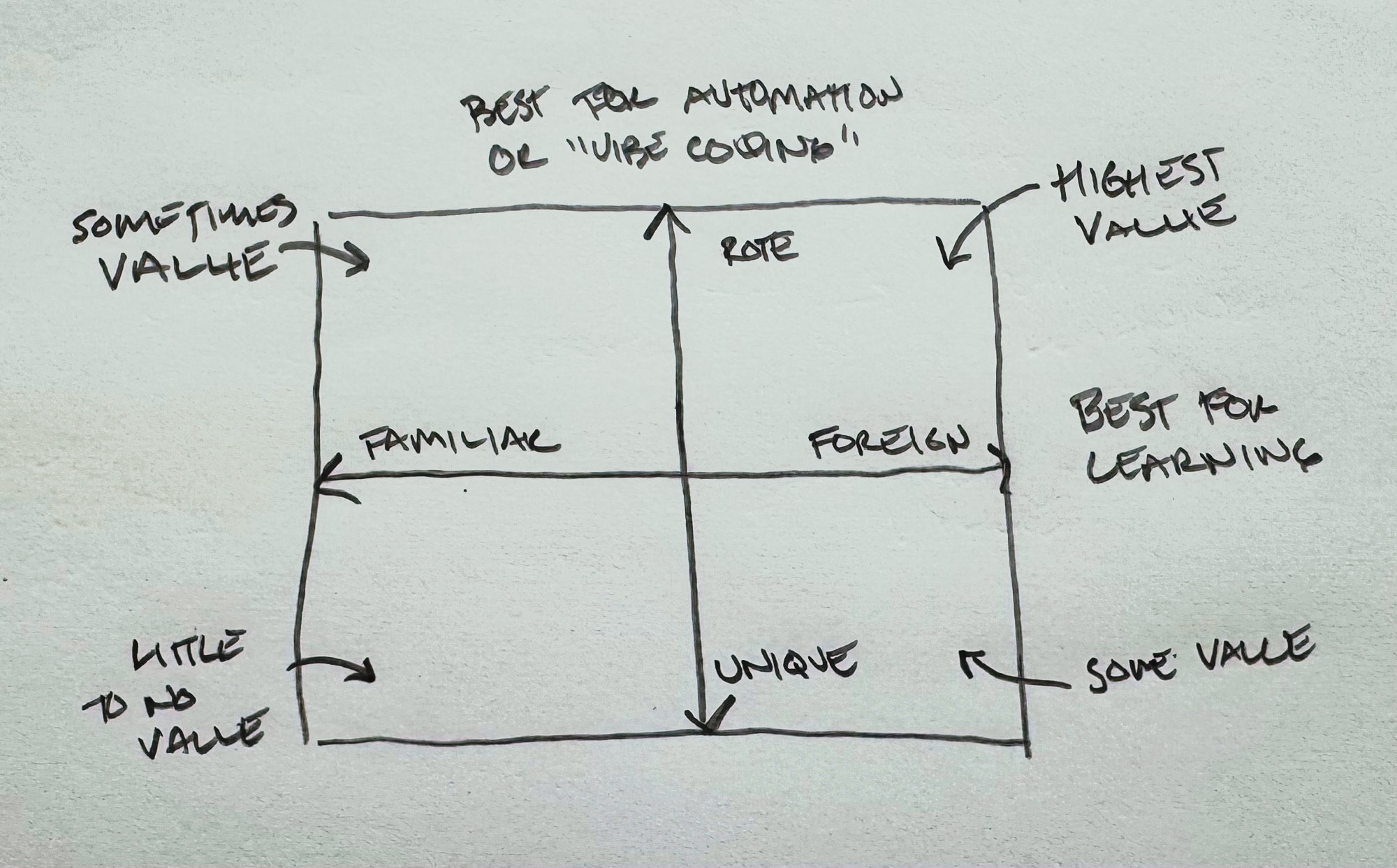

A couple of months ago, I sketched this graph (while I was on vacation), and I think it still really holds up:

The higher up a task is on the vertical axis (unique vs. rote), the more likely an agent can help write the code. The further along it is on the horizontal axis (familiar vs. foreign), the more likely LLMs are to help you learn or understand something new (whether through agents or plain LLM chatbots).

Building momentum

But what I hadn’t yet realized when I drew my chart is that agents also unlock one more thing: they build momentum. So often, I'll head off on a side quest, and by the time I have all the models/controllers/requests/templates/etc. stubbed out, all my enthusiasm is gone. Disgusted, I close the project and toss it onto the pile of other fun ideas that lost steam.

Using an agent like Junie lets me jump right past this pit. Instead of asking “which Laravel starter kit do I use?” I can jump right to experimenting with the idea that got me excited in the first place. So far, that's been a joy.

So anyway

To the Chris Morrell of January 2025, I have this to say:

Dear Chris,

Stop acting nuts. Large language models are a powerful tool that you need to figure out. It doesn’t matter that they're not intelligent. Of course, you still need to review the code they generate. It's OK that they hallucinate—you can just work around that. They're ultimately going to let you do more of what you love and less of what you hate.

Love,

Chris